Contact info:

- Academic e-mail - israel.dilan@upr.edu

- Personal e-mail - israelodilan@gmail.com

- Github username - https://github.com/Omig12/

- Linked-in - https://www.linkedin.com/in/israel-o-dilán-pantojas-77042096

Bio(6):

"Motivational note. Craig McGill crash-landed in the acid swamps of Boreal 9, fought off arachnid kidney-poachers, and hijacked a tame starwhal. If he can do all that, you can survive one more day." - Subnautica PDA

Another another weekly-ish update:

After a failed attempt to fulfill my research goals last time around, here we are again, trying to make the world right once more.

Week 13: (08-12/Apr)

- Back at it right after the exams.

- Got word of possible short reads incoming for Holothuria glaberrima Selenka.

- Started working on a possible outline for the tech report.

Week 12: (01-05/Apr)

- Studying hard for this week's exams, not much work done.

Week 11: (25-29/Mar)

- Started reading up on the problem of interpreting alternative splicing from short reads, and on how a hybrid approach that incorporates both short & long reads can address it:

- In essence, short and long reads are combined: long reads resolve ambiguity because a single read can span the whole region (especially the splice junctions, where introns are removed and exons are joined), while short reads add robustness through higher sampling depth.

- Yeast Whole Genome Sequencing with Nanopore & Illumina

Week 10: (18-22/Mar)

- Read some and watched some videos about how Nanopore samples can be aligned.

Week 9: (11-15/Mar)

- Too many evaluations this week, here's a list:

- General Biology 1 - Exam

- Mini-review presentation

- Mini-review written report

- Fermentation Lab Report

- Compilers Assignment

- Big Data to Biology - Exam

- Not much research done, if any. :(

Week 8: (04-08/Mar)

- Had an interesting idea that took shape as a possible Generative Adversarial Network based genome assembler.

- Found this recent paper on the emerging field that utilizes similar approaches: Machine Learning metagenome assembly

- Possible Starting point: GANs from Scratch 1: A deep introduction. With code in PyTorch and TensorFlow

- Skimmed a few papers this week, here's a list:

- De Novo Assembly of the Sea Cucumber Apostichopus japonicus Hemocytes Transcriptome to Identify miRNA Targets Associated with Skin Ulceration Syndrome

- Trinity: reconstructing a full-length transcriptome without a genome from RNA-Seq data

- De Novo Genome Assembly for Illumina Data

- Whole genome de-novo assembly and annotation protocol for Apostichopus japonicus genome

- Ten steps to get started in Genome Assembly and Annotation [version 1; referees: 2 approved]

Week 7: (25-01/Feb-Mar)

- Journal presentation due this week. I'm pretty excited to show you guys what I've been reading up on. The paper I will be presenting is the following: Next-Generation Sequence Assembly: Four Stages of Data Processing and Computational Challenges

Week 6: (18-22/Feb)

- Topics in Biology: Big Data to Biology - First research sprint is due, so I could not work on research this week.

Week 5: (11-15/Feb)

- Fixed the ViewSonic Secondary display in the lab. :)

- Started Reading the paper I was assigned for the journal club.

Week 4: (04-08/Feb)

- Behind on the Compilers class lexer assignment and must catch up on it; no research done this week.

Week 3: (28-01/Jan-Feb)

- Performed a search for possible papers to present during journal club

Week 2: (21-25/Jan)

- Discussed possible research topics

- Possible projects:

- De-novo Genome Assembly of Sea Cucumber

- Genomic Sequencing pipeline development

- Butterfly multiple data integration software approach:

- Gene expression, RNA-Seq, ChIP-Seq & Transcription Factors

Week 1: (14-18/Jan)

- Joined Megaprobe

Bio(5):

"War... war never changes!" - Ron Perlman

Another weekly-ish update:

This time around we'll be working with something a bit non-bioinformatics, this trimester (u_u) we'll be working with some cybersecurity. The project will focus on analysing the encryption and protocol for private gossip on the awesomely decent(ralized) scuttlebutt network.

Week 7: (23-08/Jun)

- Due to unforeseen and undue events I made the hard decision of discontinuing my attempt of researching this trimester.

Week 6: (16-20/Apr)

- Should get something meaningful graphed.

Week 5: (09-13/Apr)

- Still wrapping my head around Fourier Transforms in the context of this research, although it is clearer now.

- Should learn a lot more about Fourier Transforms.

- Added Reasearch.md to help clarify research goals.

- Added sbotcli_usage.md as a guide for using sbot to navigate the gossip.

Week 4: (02-06/Apr)

- Finally got sbot installed following this guide.

- npm is very problematic sometimes

- Do not remove python3 from your ubuntu box it might (probably will) break it

- Discussed possible implementations of Fourier transform analysis in intended research setting.

Week 3: (26-28/Mar)

- Studying Python program chunk.py! provided by Prof. Humberto Ortiz

- Tried to analyze a fragment of private feed, no dice

Week 2: (19-23/Mar)

- Reading up a lot on private box

- Explored around Scuttlebutt's Installation and possibly useful files

Week 1: (12-16/Mar)

- Reading up on scuttlebutt in general, ScuttleButt-Protocol Guide

- Found out about private box and the encryption hash convention used by scuttlebutt

Bio(4):

Guess who's back, back again

Shady's back, tell a friend

- Slim Shady

NEW-New-new weekly weekly update:

- Man I wanted to say that!

During this semester we'll be working on a shared transcript discovery tool that observes the de-bruijn graph obtained from different transcripts in order to analyze differential expression based on reads from sea cucumber RNA, with the aim of studying the parts of its genome that are related to its ability to regenerate some of its tissue.
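
As a rough illustration of the idea above (not the lab's actual tool), the sketch below builds de Bruijn edges from the reads of two samples and reports the edges they share, along with how often each one was seen in each sample. The function names `kmers`, `de_bruijn_edges`, and `shared_edges` are hypothetical, and the sketch assumes plain string reads and ignores reverse complements and sequencing errors.

```python
from collections import Counter


def kmers(sequence, k):
    """Yield every k-mer of a sequence, left to right."""
    for i in range(len(sequence) - k + 1):
        yield sequence[i:i + k]


def de_bruijn_edges(reads, k):
    """Count de Bruijn edges: each k-mer links its (k-1)-mer prefix to its suffix."""
    edges = Counter()
    for read in reads:
        for kmer in kmers(read, k):
            edges[(kmer[:-1], kmer[1:])] += 1
    return edges


def shared_edges(edges_a, edges_b):
    """Return edges present in both samples, mapped to (count_a, count_b)."""
    common = edges_a.keys() & edges_b.keys()
    return {edge: (edges_a[edge], edges_b[edge]) for edge in common}


# Hypothetical toy reads for two samples.
sample_a = de_bruijn_edges(["ACGTAC"], k=3)
sample_b = de_bruijn_edges(["CGTT", "CGT"], k=3)
shared = shared_edges(sample_a, sample_b)
```

The per-sample counts attached to each shared edge are the kind of raw numbers a differential expression comparison could start from.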

Sea Cucumber or Holothuria glaberrima Selenka

Image taken from BMC genomics [article](https://bmcgenomics.biomedcentral.com/articles/10.1186/1471-2164-15-357)

Week 14: (26-30/Feb)

- Classes have officially ended

- Software and report have been discussed, reviewed, and finalized

Week 13: (19-23/Feb)

- Last lab meeting of this semester

- We discussed the technical report

- We discussed the final details of the modifications to the algorithm and programs

Week 12: (12-16/Feb)

- Verified optimized algorithms

- Discussed future directions for the software created and the research

Week 11: (05-09/Feb)

- Discussed possible approach for future research goals

- Approach to comparing sequences utilizing a Differential Expression Coefficient

- Implemented some optimizations to algorithm (Python generators anyone)

- Went over technical report with Kevin
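
For context on the generator remark above, here is a minimal sketch of the general technique, not the optimization that was actually implemented. A generator lets FASTA records be processed one at a time instead of loading the whole file into memory. The name `read_fasta` is hypothetical.

```python
def read_fasta(lines):
    """Lazily yield (header, sequence) pairs from an iterable of FASTA lines."""
    header, chunks = None, []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith(">"):
            # A new header closes the previous record, if there was one.
            if header is not None:
                yield header, "".join(chunks)
            header, chunks = line[1:], []
        else:
            chunks.append(line)
    # Emit the final record once the input is exhausted.
    if header is not None:
        yield header, "".join(chunks)


# Works on any iterable of lines, including an open file handle.
records = list(read_fasta([">a\n", "AC\n", "GT\n", ">b\n", "TT\n"]))
```

Because an open file handle is itself a lazy iterable of lines, passing one to `read_fasta` keeps only a single record in memory at a time.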

Week 10: (29-02/Jan-Feb)

- Talked about trouble with generating sample data for validation

- Looking for possible optimizations to algorithms

Week 9: (22-26/Jan)

- Missed meeting due to work

Week 8: (15-19/Jan)

- Missed meeting due to work

Week 7: (08-12/Jan)

- Meeting suspended this week

Week 6: (01-05/Jan)

- Meeting suspended this week

Week 5: (25-29/Dec)

- First birthday ever spent in school, such a weird feeling. Thanks to everyone who took part in making it a special day.

Week 4: (18-22/Dec)

- Branched the program for possible GFA2 adaptation

- Discussed possible approaches to differential expression on this software

- Biological significance of data vs. memory efficiency and performance (time complexity)

- Ease of interpretation of results

- Future uses of the generated data: to log or to garbage collect, that is the question

Week 3: (11-15/Dec)

- Settled on using GFA-1 format specifications for output
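
To make the output format concrete, here is a small sketch of what writing GFA 1.0 text can look like. It is an illustration only, not the project's writer: the function name `to_gfa1` and the input shapes are assumptions. The record types used (`H` header, `S` segment, `L` link) and the optional `RC:i:` read count tag come from the GFA 1.0 specification.

```python
def to_gfa1(segments, links):
    """Render segments and links as GFA 1.0 text.

    segments: dict mapping segment name -> (sequence, read_count)
    links: iterable of (from_name, from_orient, to_name, to_orient, overlap_cigar)
    """
    lines = ["H\tVN:Z:1.0"]  # header record with the format version
    for name, (sequence, read_count) in segments.items():
        # S record: name, sequence, and an optional read count tag.
        lines.append(f"S\t{name}\t{sequence}\tRC:i:{read_count}")
    for src, src_orient, dst, dst_orient, overlap in links:
        # L record: orientations are "+" or "-", overlap is a CIGAR string.
        lines.append(f"L\t{src}\t{src_orient}\t{dst}\t{dst_orient}\t{overlap}")
    return "\n".join(lines) + "\n"


# Hypothetical two-segment graph with one link.
text = to_gfa1(
    {"1": ("ACGT", 7), "2": ("GTTA", 3)},
    [("1", "+", "2", "-", "2M")],
)
```

Text written this way can be saved with a `.gfa` extension and opened in a viewer such as Bandage.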

Week 2: (04-08/Dec)

- Started reading up on the Sea cucumber paper

- Transcriptomic changes during regeneration of the central nervous system in an echinoderm

- Started tinkering with the previously mentioned algorithm goals:

- Add some counting methods to algorithm - WE GOT IT

- Evaluate possibility of parallelization

- Files that will prove useful:

- Gist for work in progress

- GFA file format specification

- Bandage for visualizing the de-bruijn graph

- De-bruijn graph by pmelsted

- Edge cases of De-bruijn graph by pmelsted

- Debugging De-bruijn graph by pmelsted

Week 1: (27/Nov-01/Dec)

- Had an unofficial first meeting with collaborators

- Drew project goals

- Discussed possible approaches for the study

- Partially analyzed the code in pmelsted de-bruijn graph generator

Bio(3):

New-New Weekly update:

iBRIC summer internship at University of Pittsburgh, it's gonna be a blast and I'll keep you posted.

Image taken from Google

Week 10: (24-28/July)

- Mock presentations due Monday and Tuesday

- Presentation to Department of Biomedical Informatics on Wednesday

- Poster due Wednesday

- Research presentation due Wednesday

- Poster presentation @Symposium due Friday

Week 9: (17-21/July)

- Finally got some data on the comparison of the algorithms

- Data seems to favor our hypothesis

- Some errors were pointed out, so I'm running the analysis again

- Final Poster Crunch time

- Research presentation Crunch time

- Weekly meeting with my mentors, we discussed:

- Preliminary results

- The poster draft

- Due to time constraints we won't be working with the Sachs data

- EVERYTHING IS DUE NEXT WEEK EEEKKK

Week 8: (10-14/July)

- Research topic update:

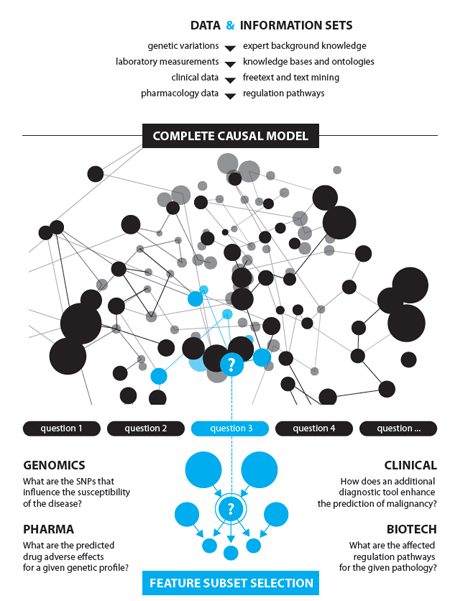

- Enabling Constraint-Based Causal Discovery Algorithms to obtain more information from observational and experimental data by performing interventions.

- Research round-table #2 went great.

- Polished and submitted abstract

- Modeling interventions in causal networks to enrich constraint-based causal search

- Weekly meeting with one of my mentors, we discussed:

- Relevant hurdles we need to address to improve the effectiveness of the algorithms

- Research direction for the next few weeks

Week 7: (03-07/July)

- Read part of a paper suggested by my graduate student mentor

- Joint Causal Inference from Observational and Experimental Datasets (Magliacane, Sara et al.)

- Worked on the abstract because it's due next Wednesday, July 12th

- Created some graphs that'll hopefully be useful for the poster

- Broke my operating system's graphical user interface (Ubuntu 16.04.02 LTS) by messing with the drivers

- Nvidia 304 driver: broken package

- Uninstall the package and reinstall Ubuntu-Desktop

- Used "$ startx", which changes the ownership of the xserver to root so no one else can use it :(

- "sudo chown $USER:$USER .Xauthority" should fix it

- Fixed it on the weekend

Week 6: (26-30/June)

- Watching most BD2K video resources on working with statistics, big data, & causal modeling

- Found this awesome paper/primer by Chris J Needham, James R Bradford, Andrew J Bulpitt, and David R Westhead, Fran Lewitter (Editor) to aid me in the task of understanding and explaining causal discovery research

- The date for the presentation of the abstract approaches, an early draft can be found here

- Research round-table came up this week and it went great. Through constant discussion of my research topic I am able to refine my understanding of my work and its importance, which in turn helps me a lot in presenting and advancing it

Week 5: (19-23/June)

- Reference and Citation management talk

- Ethics Forum presentation at Duquesne University:

- Michael A. Bellesiles Ethics Case

- Weekly meeting with graduate student mentor

- Talked about representing experimental data in causal software

- Currently playing with his code for interventional data in Tetrad that can be found in his repository

- Working on understanding the relevance and implications of separating interventional/experimental data from observational data on the causal models.

- Reading section 5.4.2 from Causation, Prediction, and Search by Peter Spirtes et al. to better understand the PC Algorithm

Week 4: (12-16/June)

- Center for Causal Discovery short course

- Main topics Discussed:

- Causal Networks

- Bayesian Statistics

- Bayesian Causal Networks

- Tetrad Causal Discovery Software

- Datathon Thursday

- Journal Club Meeting on Friday

Week 3: (05-09/June)

- During this week, I was instructed to do the following:

- Get the Tetrad software up and running on my computer

- Read up on and understand the (Peter Spirtes and Clark Glymour) PC Algorithm

- Observational Data Representation within causal networks

- Experimental Data Representation within causal networks

- Ethics Forum Group presentation on Michael A. Bellesiles Case Arming America coming soon.

- Research topic update:

- Methods for integrating both Observational and Experimental Data in Constraint-Based Causal Discovery Algorithms for Causal Discovery in Causal Bayesian Networks.

Week 2: (30/May-02/June)

- During this week we have been asked to write a brief proposal due Friday 2nd.

- Proposal

- Reading a lot of literature in order to understand Causal Bayesian Networks, d-separation and the Causal Markov condition.

Week 1: (22-26/May)

- Was assigned to Dr. Gregory Cooper's Lab at the Pitt Department of Biomedical Informatics in the Center for Causal Discovery.

- Was required to complete ethics in research training modules.

- Responsible Conduct of Research

- Biomedical Informatics Research ethics

- Research topic:

- Causal Discovery utilizing Causal Bayesian Networks Algorithms

- Started reading up on all the required statistics and graph theory required to work with the topic.

- Currently reading the first chapter of:

Glymour, C. N., & Cooper, G. F. (Eds.). (1999). Computation, causation, and discovery. AAAI Press.

Bio(2):

I have respawned :) Muahahahahhah! No but for real, I'm back, doing research at the Megaprobe-Lab in the hopes of expanding my knowledge of bioinformatics and general programming.

New Weekly UPDATE (Jan 2017 - May 2017)

Week 11-19:

- Classes still interrupted.

Week 10: (20-27/Mar)

- Campus went on strike protesting budget cuts.

- Classes suspended till further notice.

Week 9: (13-17/Mar)

- Starting to write Python Makefile Snakemake tutorial

- Snakemake documentation

Week 8: (06-10/Mar)

- Professor suggested utilizing SnakeMake; so far it's a really good prospect.

- Read this SnakeMake paper for more info.

Week 7: (27/Feb-3/Mar)

- Found a more pythonic way of finding information about device resources while reading up on the python standard library documentation.

- Found interesting alternative to make called Do-it.

Week 6: (20-24/Feb)

- Very anxious this Week.

- Student strike.

- Got sick :'(

Week 5: (13-17/Feb)

- Did not work in the lab this week.

- Most time was spent studying.

Week 4: (06-10/Feb)

- Pipeline device resource detection script:

- Return available CPU cores

- Return available RAM free
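
A resource detection script along these lines could look like the sketch below, which uses only the Python standard library. It is an illustration under stated assumptions, not the script written for the pipeline: the function names are hypothetical, and the RAM lookup assumes a Linux machine that exposes a `MemAvailable` entry in `/proc/meminfo`.

```python
import os


def available_cpu_cores():
    """Return the number of CPU cores this process may use."""
    try:
        # Linux only: respects CPU affinity restrictions (e.g. taskset).
        return len(os.sched_getaffinity(0))
    except AttributeError:
        # Platforms without sched_getaffinity fall back to the total count.
        return os.cpu_count() or 1


def available_ram_bytes(meminfo_path="/proc/meminfo"):
    """Return MemAvailable in bytes, or None if it cannot be determined."""
    try:
        with open(meminfo_path) as handle:
            for line in handle:
                if line.startswith("MemAvailable:"):
                    # Line format: "MemAvailable:   12345678 kB"
                    return int(line.split()[1]) * 1024
    except OSError:
        pass
    return None


if __name__ == "__main__":
    print("cores:", available_cpu_cores())
    print("available RAM (bytes):", available_ram_bytes())
```

A makefile or workflow tool can then use these values to pick thread counts and memory limits for the heavier assembly steps.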

Week 3: (01-05/Feb)

- Working on pipeline makefile.

- Learning about Trinity.

Week 2: (25-29/Jan)

- Started work on pipeline makefile.

- Looking at Diginorm.

Week 1: (19-22/Jan)

- Started reading up on the khmer paper!

- Joined the Lab :') YEY!

Bio:

I confess my crimes against humanity and Eleutherodactylus coqui races alike, for I have vanquished the furious lab frog.

Now, I am become Death, the destroyer of worlds. J.R. Oppenheimer

Research Goals

Create and analyze correctly obtained De Bruijn graphs from de-novo sequence assemblies utilizing mutual.

Weekly UPDATE (Jan 2016 - May 2016)

Week 17: (09/May-13/May)

- Got down to working on the technical report.

- Cleaned and updated the repo:

- Improved formatting on documents.

Week 16: (02/May-6/May)

- Worked Hard on fixing parser issues.

- Got some output, lightly checked/skimmed output for errors, did not find any.

- Continued working on technical report.

- To do:

- Finish technical report.

Week 15: (25-29/Apr)

- Began corroboration of correct parsing of LastGraph file:

- Noticed wrong data being displayed in the Read Count of the parser's GFA output.

- Noticed a problem in the links reported by Bandage's output vs. the parser's output.

- Noticed a problem in the node orientation of the links reported by the parser.

- Changed the way the parser stores the data: instead of first storing it in an array, it now utilizes networkx's built-in structure for storing attributes.

- Fixed wrong read count report.

- Kinda theoretically fixed links being reported and node orientation.

- Parser is temporarily broken due to issues with networkx structure.

- To do:

- Fix issues that are preventing the parser from executing correctly.

- Continue technical report.

Week 14: (18-22/Apr)

- Started working on LastGraph parser in python:

- Reads file

- Finds NODE

- Appends to list

- Finds ARC

- To done :)

- Write all contigs obtained from De-Bruijn

- Determine format for output

- Presumably got a working parser now.

- New to do:

- Corroborate parser works

- Keep improving it

- Initiate technical report
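
The Week 14 parser steps (read the file, find NODE, append to a list, find ARC) can be sketched roughly as follows. This is not the parser from the repo. The function name `parse_lastgraph` is hypothetical, and the record layout is an assumption based on the Velvet manual: a header line, `NODE` lines each followed by two sequence lines (forward and twin strand), and `ARC` lines holding start node, end node, and multiplicity, with negative IDs denoting the twin strand.

```python
def parse_lastgraph(lines):
    """Collect NODE and ARC records from an iterable of LastGraph lines."""
    nodes, arcs = [], []
    it = iter(lines)
    next(it, None)  # skip the header line (node count, sequence count, k, ...)
    for line in it:
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "NODE":
            # The two lines after a NODE record hold the forward and twin sequences.
            forward = next(it, "").strip()
            twin = next(it, "").strip()
            nodes.append({
                "id": int(fields[1]),
                "stats": fields[2:],  # coverage columns, kept as raw strings
                "forward": forward,
                "twin": twin,
            })
        elif fields[0] == "ARC":
            # (start node, end node, multiplicity)
            arcs.append((int(fields[1]), int(fields[2]), int(fields[3])))
    return nodes, arcs


# Hypothetical miniature input for illustration.
sample = [
    "2\t10\t31\t1\n",
    "NODE\t1\t5\t5\t0\t0\n", "ACGTA\n", "TACGT\n",
    "NODE\t2\t3\t3\t0\t0\n", "GGG\n", "CCC\n",
    "ARC\t1\t-2\t4\n",
]
nodes, arcs = parse_lastgraph(sample)
```

Any other record types in the file are simply skipped, which keeps the sketch focused on the graph structure itself.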

Week 13: (11-15/Apr)

- Hulk got back up (still has some fight left).

- Ran Mutual twice simultaneously:

- A) With the purpose of comparing organism A's De-Bruijn graph (input: velvet/oases output [velvetA]) with organism B's De-Bruijn graph (input: velvet/oases output [velvetB]) after the data had been cleaned with sickle and scythe.

- B) With the purpose of comparing organism A with itself (self comparison), expecting duplicate output from Mutual (outputa.fa = outputb.fa).

- Created a database using Blast's formatdb on Mutual's output (outputa.fa) to compare other .fa files against it.

- Blasted through Mutual's output (outputa.fa) database against Velvet's output (contigs.fa)

- Blasted through Mutual's output (outputa.fa) database against Mutual's output (outputb.fa)

- To do:

- Analyse results to see if the comparison results were accurate.

Week 12: (04-08/Apr)

- Many evaluations this week also :'(, worked mostly on preparing a bioinformatics presentation that aids in explaining Mutual's Heuristic Pairwise Alignment algorithm implementation.

- Was corrected by the professor: I need to parse Velvet's LastGraph file (that is to say, its De-Bruijn graph), not the fasta/fastq file.

- Good news everyone! Found a LastGraph parser written in Ruby. Bad news everyone! I don't understand Ruby. :{

- Tried to run Mutual but The Hulk (server) is down.

- To do:

- Turn to Velvet/Oases De-Bruijn parser.

- Need to run clean data on Hulk.

Week 11: (28/Mar-01/Apr)

- Utilized Bandage to create a few graphs of interesting contiguous nodes.

- Experimented with a few forms of output, including GFA extensions to graph.

- Started reading up on parsers for fasta/fastq files. Using Biostars https://www.biostars.org/p/710/ Guides and Nacrokill's https://github.com/NacroKill/dmel-ercc-diff/blob/master/Tophat_Birnavirus_Confirmer_2.0.py parsers for reference.

- Taking a bit of a break many exams this and the next week.

Week 10: (21-25/Mar)

- Holy Week/Spring break.

Week 9: (14-18/Mar)

- Ran clean data through Velvet/Oases.

- Graphed Output of Organism A.

- Noticeable results on graph.

- Preparing to run files on mutual.

- Student assembly paralyzed work.

Week 8: (07-11/Mar)

- Git repo is finally in order. :)

- Finally got to quality trimming:

- Needed Illumina Adapters FASTA files to remove adapters, found them in Trimmomatic source code. http://www.usadellab.org/cms/?page=trimmomatic

- Helpful note: utilize FastQC to help determine how to quality control your reads, especially for figuring out the encoding (in this case Illumina/Sanger encoding).

- Apparently scythe supports gzipped fastq files.

- Finally removed Adapters

- Finally trimmed Edges

- Created quality reports using FASTQC for each step.

- To do:

- Verify if quality can be further improved.

- Re-run mutual with clean data and graph outputs

- Close to source code examination.

Week 7: (29/Feb-4/Mar)

- Reinstalled Ubuntu. :'D (So shiny!)

- Updated Weekly.

- Many exams this week.

- Began reading "The Cartoon Guide To Genetics" by Larry Gonick & Mark Wheelis.

- Analyze best way to compare outputs from velvet/oases and mutual.

- Options:

- Find a way to graph mutual's output and compare the graphs with velvet's.

- Also possibly compare with the output of other software that does analysis between multiple organisms.

- Utilize blast to compare each contig.

- Utilize Clustal to compare contig alignment.

- Still to do:

- Remove Adapters with Scythe.

- Trim edges with Sickle.

Week 6: (22-26/Feb)

- Fought with git and github's repos in an effort to sync files, failed.

- Switching strategy: prioritizing some files to be copied and leaving the other ones stored only locally on both hulk and my personal machine.

- Installed Scythe and Sickle.

- Graphed Contigs from velvet's LastGraph utilizing Bandage.

- Local computer's Ubuntu OS broke (RIP) :'( Thanks to prior advice given in a lab meeting, I had created multiple backups of the research data.

- Read a few more papers concerning bioinformatics and its history.

Week 5: (15-20/Feb)

- Worked on creating research documentation and a research log:

- Wrote FAQ to help ease the reproduction of this research.

- Experienced many issues syncing local and remote git repo.

- Verified Mutual's output using two different blast services; both correctly identified the Nematostella similarity.

- Verified quality of input files using FastQC; the files contain very high quality reads.

- To do:

- Visualize contigs using Bandage.

- Trim Illumina adapters from input sequences.

Week 4: (08-12/Feb)

- Setting up Hulk server to run mutual. (Still missing velvet)

- Battled with Hulk to run mutual.

- Led to a crash :'( after two days of work.

- Finally got it down, ran mutual and got some output.

- Initiated analyzing phase, to do:

- Verify Mutual's output utilizing blast http://blast.ncbi.nlm.nih.gov/Blast.cgi and blastn http://genome.jgi.doe.gov/pages/blast-query.jsf?db=Nemve1

- Clean up initial data by removing adapters using Sickle and Scythe http://bioinformatics.ucdavis.edu/research-computing/software/.

- Visualize mutual outputs with the help of bandage https://github.com/rrwick/Bandage.

- Started working on a more readable and reproducible version of the documentation for this research.

Week 3: (01-05/Feb)

- Initiated test running of mutual on local (DELL Inspiron 7548) machine, utilizing sample Sea Anemone Dataset.

- Estimated run-time: 72 hours.

- Expected output: A transcript with very long contigs due to the high similarity of the two test organisms.

- Results: Two empty output files. >:'(

- Possible issues: Computer might not meet the requirements to perform such heavy work efficiently.

- Path of action: Proceeded to move operations elsewhere, to the University of Puerto Rico's Computer Science Department's very own Hulk.

- Rested for the rest of the week (get it?) due to health problems.

Week 2: (25-29/Jan)

- Installed required programs (velvet/oases) to test out mutual.

- Acquired Sample Data Set of Nematostella Embryonic Transcriptome (Starlet sea anemone). https://darchive.mblwhoilibrary.org/handle/1912/5613

- Working on glossary of Bioinformatics related terms.

- Continued reading up on de-novo sequences, mutual/velvet/oases and De-Bruijn Graph assembly of short reads.

- Test ran mutual.

Week 1: (19-22/Jan)

- Started reading up on de-novo sequencing and familiarizing with the terms used around it.